Class for interpolation transfers (for example, between CPU domains in a parallel simulation or between meshes in a multigrid approach).

#include <interp_transfer.h>

Classes | |

| struct | comm_t |

| manages two buffers: one to send values to another processor and one to receive values from it | |

| struct | irequest_t |

| interpolation request | |

| struct | reqpair_t |

| first element: buffer for the MPI_Request used by MPI_Send; second element: buffer for the MPI_Request used by MPI_Recv | |

Public Types | |

| typedef base_tt::val_t | val_t |

| type of interpolated value (for example, double or complex) | |

Public Member Functions | |

| void | AddDefaultTimers (const std::vector< int > &p_id) |

| connect internal timers hierarchy from log to external timers p_id | |

| int | set_blocking_mode (int mode) |

| 1 = complete transfers after starting | |

| int | test_rank (Vector_3 &pos) const |

| identify the node which maintains the given position | |

| iterator | begin () |

| returns the nonlocal packer iterator; ensures that recording is correctly finished | |

| iterator | begin_local () |

| returns the local packer iterator; ensures that recording is correctly finished | |

| iterator | end () |

| returns nonlocal end | |

| iterator | end_local () |

| returns local end | |

| size_t | packed_size () const |

| returns packed size in bytes | |

| size_t | data_size () const |

| returns full unpacked sequence size in bytes | |

| size_t | mpi_size (int out=0) const |

| gets total buffer sizes (for info) | |

| size_t | recv_size () const |

| full size of received data including local transfers | |

| size_t | send_size () const |

| full size of sent data including local transfers | |

| size_t | full_data_size () const |

| full transfers size, including local (counted once) and mpi in both directions | |

| int | register_buffer (val_t *bufp, val_t *bufp_vec=nullptr, int meshid=-1) |

| register a destination buffer array where transferred data should be collected; the pointer bufp is not managed (it must be deleted externally) | |

| val_t * | substitute_buffer (int bufnum, val_t *ptr, val_t *ptr_vec=nullptr) |

| substitute pointer at the buffer array, see also register_buffer | |

| val_t * | get_buffer (int bufnum) const |

| gets buffer with given number | |

| int | put_request (int ibuf, index_t ind, const arg_t &arg, int action) |

| record the argument arg and the index ind in buffer ibuf to which the value corresponding to arg should be transferred; action is a set of bit flags (see the detailed description of put_request) | |

| int | put_vrequest (int ibuf, index_t ind, std::vector< arg_t > &argsum, coef_t c_sum, int action) |

| same as put_request, but the sum of the values for all arguments, multiplied by c_sum, is recorded in the buffer | |

| int | put_full_request (int ibuf, int &ind, const Vector_3 &pos, int action=1) |

| put requests for all possible arguments at position pos | |

| int | put_full_request (int ibuf, int &ind, const Vector_3 &pos, const Vector_3 &domain_shift, int action=1) |

| put requests for all possible arguments at position pos setting domain shift | |

| size_t | get_full_data_size () |

| return size of values for all possible arguments | |

| void | set_full_data (int outtype_) |

| use less than 3 components of E and H fields in put_full_request (not implemented) | |

| int | transfer_all_interpolation_requsets () |

| make negotiations for interpolation requests | |

| int | prepare_transfers () |

| make transfer negotiation (implementation: commtbl and MPI procedures are initialized) | |

| int | compute_local (int async_depth=0) |

| makes non-mpi part of transfers | |

| void | start_transfers (int async_depth=0) |

| prepare output buffers and start non-blocking mpi-transfers | |

| void | complete_transfers (int force=0) |

| complete non-blocking mpi-transfers | |

| void | remap_async_interpolation (const std::map< indtype, indtype > *indmap=nullptr) |

| remaps all interpolations according to new buffer indices. The map is mesh_ind->new_buf_ind | |

Public Member Functions inherited from apComponent | |

| void | InitComponent (const apComponent &other, const std::vector< int > &p_id=std::vector< int >()) |

| borrow settings from other component | |

| virtual void | DumpOther (const apComponent *comp, bool lim=false) |

| dump some component using dumper | |

| virtual void | DumpOther (int flags, const apComponent *comp, bool lim=false) |

| dump some component using dumper and checking bit flags | |

| virtual void | Dump (bool lim=false) |

| dump itself using dumper | |

Protected Types | |

| typedef void | request_t |

| number of created object, used in logfile | |

Protected Types inherited from apComponent | |

| typedef std::set< apComponent * > | registry_t |

| name used in dump and runlog files | |

Protected Member Functions | |

| void | init_mpi_table () |

| allocate output and input arrays in commtbl and initialize the corresponding MPI_Send and MPI_Recv procedures | |

| void | free_mpi_requests () |

| deinitialize the MPI_Send and MPI_Recv procedures (initialized in init_mpi_table) | |

| template<int Direct = 0> | |

| int | fill_buffers (iterator it, iterator e, int async=0) |

| fills (destination or mpi-output) buffers with interpolated values | |

| int | fill_buffers_val (iterator it, iterator e, val_t v) |

| fill all buffers with some value (for debug needs) | |

| int | transfer_requests (std::vector< typename comm_t::packer_t > &secondary_dests) |

| processes requested interpolations (they are recorded at rankvec). | |

Protected Member Functions inherited from apComponent | |

| void | start (int id) |

| start timers | |

| void | stop (int id, int force=0) |

| stop timers | |

Protected Attributes | |

| int | myrank |

| processor rank | |

| int | np |

| number of processors | |

| int | rec_state |

| record state: 0=initial, 1=recording, 2=recorded | |

| base_t | ip |

| packer of mpi-transfers: interpolation - pair(buffer number, index) | |

| base_t | iplocal |

| packer for local non-mpi transfers: interpolation - pair(buffer number, index) | |

| std::vector< val_t * > | buff |

| destination buffers. first np buffers are reserved as output buffers to other processors | |

| std::vector< val_t * > | buff_vec |

| vector destination buffers. first np buffers are reserved as output buffers to other processors | |

| std::vector< int > | meshids |

| meshid, indicating the need for processing | |

| int | mpidump |

| if 1, then logfile will be recorded | |

| int | myid = 0 |

| global object counter, used in logfile | |

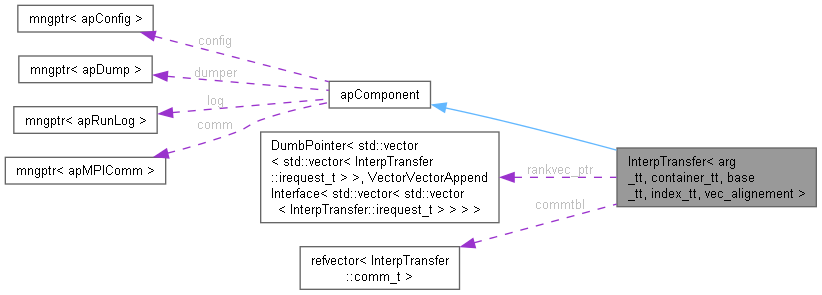

| LocalPointer< std::vector< std::vector< irequest_t > >, VectorVectorAppendInterface< std::vector< std::vector< irequest_t > > > > | rankvec_ptr {&rankvec} |

| vectors of interpolation requests for each processor | |

| refvector< comm_t > | commtbl |

| comm_t structures for each processor | |

| int | nrecv |

| number of processors to which nonzero-size data should be sent (or from which it should be received) | |

| void * | rrequests |

| arrays for MPI_Request of sizes nsend, nrecv | |

| void * | rstatuses |

| arrays for MPI_Status of sizes nsend, nrecv | |

| std::unique_ptr< int[]> | sdispl |

| auxiliary arrays used in transfer_requests and prepare_transfers | |

| int | blocking_mode = 0 |

| 1 = complete transfers after starting | |

Protected Attributes inherited from apComponent | |

| int | ut |

| if information about this object will be dumped | |

| std::string | name |

| if timers will be used | |

Static Protected Attributes | |

| static int | idcount =0 |

| logfile for mpi-transfers | |

Detailed Description

class InterpTransfer< arg_tt, container_tt, base_tt, index_tt, vec_alignement >

Class for interpolation transfers (for example, between CPU domains in a parallel simulation or between meshes in a multigrid approach).

It sends values (for example, the electromagnetic field in the FDTD method) at chosen space locations to target memory arrays. These values are obtained by interpolation on the mesh. If the target array and the mesh array belong to different CPU domains, MPI procedures are used to perform the transfer; otherwise the data is simply copied.

The transfer class can be applied for different purposes. For example, if the output and input points of a discretized update equation on a mesh belong to different CPU domains, the transfer class sends the value at the input point between these two domains so that the update equation can be evaluated. The transfer class may also be used to group all similar transfers together in order to benefit from non-blocking MPI synchronization mechanisms triggered at the right time. Finally, it can be used when some domain collects data values at chosen points of the calculation volume in order to record them in a file or for further analysis.

Working with an object of this class involves two stages.

During the first stage, all the arguments for which data values are required should be registered in the transfer object, along with the target arrays and the indices in these arrays to which the data values should be sent (put_request, put_vrequest). Target arrays are assigned by register_buffer and substitute_buffer. After all arguments are registered, the MPI procedures should be initialized (prepare_transfers).

During the second stage, the transfer object (a) asks the meshes which contain the registered arguments to interpolate the data values there, and (b) sends these values to the corresponding indices of the target arrays. Local transfers are performed by compute_local. MPI transfers are performed by start_transfers and complete_transfers.
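The two stages can be sketched with a single-process stand-in. This is only an illustration under stated assumptions: MockTransfer is a hypothetical class that borrows the documented method names but not the real signatures, interpolation is mocked as 2*arg, and the order in which compute_local is called relative to the start/complete pair is one plausible choice that this page does not prescribe.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for InterpTransfer: same method names as the
// documented API, but a trivial single-process implementation.
struct MockTransfer {
    struct Request { int ibuf; std::size_t ind; double arg; int action; };

    std::vector<double*> buff;      // registered destination buffers (not owned)
    std::vector<Request> requests;  // recorded during stage 1
    bool prepared = false;

    // Stage 1: register a destination array; returns its buffer index.
    int register_buffer(double* bufp) {
        buff.push_back(bufp);
        return static_cast<int>(buff.size()) - 1;
    }
    // Stage 1: request that the value for arg lands at buff[ibuf][ind].
    int put_request(int ibuf, std::size_t ind, double arg, int action) {
        assert(!prepared && "requests must be recorded before prepare_transfers");
        requests.push_back({ibuf, ind, arg, action});
        return 1;
    }
    // End of stage 1: the real class negotiates MPI transfers here.
    int prepare_transfers() { prepared = true; return 1; }

    // Stage 2, local (non-MPI) part. Interpolation is mocked as 2*arg.
    int compute_local() {
        assert(prepared);
        for (const Request& r : requests) {
            double v = 2.0 * r.arg;
            if (r.action & 1) buff[r.ibuf][r.ind] = v;   // overwrite
            else              buff[r.ibuf][r.ind] += v;  // add
        }
        return static_cast<int>(requests.size());
    }
    // Stage 2, MPI part: nothing to do in a single-process mock.
    void start_transfers() {}
    void complete_transfers() {}
};

// Runs both stages once and returns the filled target array.
std::vector<double> run_two_stage_example() {
    std::vector<double> target(3, 1.0);
    MockTransfer tr;
    int ibuf = tr.register_buffer(target.data());
    tr.put_request(ibuf, 0, 0.5, 1);  // overwrite slot 0 with 2*0.5
    tr.put_request(ibuf, 2, 1.5, 0);  // add 2*1.5 to slot 2
    tr.prepare_transfers();
    tr.start_transfers();     // the real class would start non-blocking MPI here
    tr.compute_local();       // local copies
    tr.complete_transfers();  // the real class would wait for MPI completion
    return target;
}
```

Calling run_two_stage_example() leaves slot 1 untouched, overwrites slot 0 and accumulates into slot 2. In real code the stage-2 calls would typically be repeated every time step, while stage 1 is done once.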

arg_tt is the argument type for which a value is collected (for example, in EMTL this is a structure that contains position, field type and field direction; the value is the field projection on this direction). container_t is the container (a mesh or an association of meshes) that manages the values array. interp_packer_t is the packer of interpolations (interpolations are structures for extracting interpolated values from the container). index_t is the type used for array indexing (int for 32-bit architecture).

WARNING: The template signature has changed. To maintain the previous behaviour, use the LegacyInterpTransfer alias. Since aliases can't be instantiated, use LegacyInterpTransferHelper to instantiate the corresponding InterpTransfer.

Member Function Documentation

◆ put_request()

| int put_request (int ibuf, index_t ind, const arg_t &arg, int action) | inline |

Records the argument arg and the index ind in buffer ibuf to which the value corresponding to arg should be transferred. The action argument is a set of bit flags:

- first bit: 1 means overwrite existing data in the buffer, 0 means add to it

- second bit (reserved for future use): 1 means generate async buffer indices using the container async map

Implementation: the local part is recorded to iplocal and the nonlocal part is recorded to rankvec.
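The meaning of the action bits can be illustrated with a small helper. This is a sketch, not code from the library: the constant names ACTION_OVERWRITE and ACTION_ASYNC_IND and both helper functions are hypothetical, and it assumes that "first bit" refers to the least significant bit.

```cpp
#include <cassert>  // used by the checks in the usage note below

// Hypothetical names for the bits of action; the documentation only
// describes their meaning and does not name any constants.
enum ActionBits : int {
    ACTION_OVERWRITE = 1 << 0,  // first bit: 1 = overwrite, 0 = add
    ACTION_ASYNC_IND = 1 << 1   // second bit: reserved for future use
};

// Applies a transferred value to a buffer slot according to the first bit.
inline void apply_value(double& slot, double value, int action) {
    if (action & ACTION_OVERWRITE) slot = value;
    else slot += value;
}

// Tells whether the (reserved) async-index bit is set.
inline bool wants_async_indices(int action) {
    return (action & ACTION_ASYNC_IND) != 0;
}
```

With a slot holding 2.0, apply_value(slot, 3.0, 0) accumulates to 5.0, and a subsequent apply_value(slot, 3.0, ACTION_OVERWRITE) resets it to 3.0.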

◆ register_buffer()

| int register_buffer (val_t *bufp, val_t *bufp_vec=nullptr, int meshid=-1) | inline |

Registers a destination buffer array where transferred data should be collected. The pointer bufp is not managed (it must be deleted externally).

- Returns

- the buffer index

If memory for the buffer is not allocated yet, bufp can be nullptr; after the memory is allocated, the pointer to the buffer array should be substituted using substitute_buffer. bufp_vec is an accompanying aligned pointer. meshid, if nonnegative, indicates that this is a mesh buffer and that copying to it should be processed by the container.
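The deferred-allocation pattern described here (register with nullptr, substitute the pointer later) might look as follows. BufferRegistry is a hypothetical, simplified stand-in that omits bufp_vec and meshid; in particular, having substitute_buffer return the previous pointer is an assumption, since this page does not say what the real function returns.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <vector>

// Hypothetical minimal registry mirroring register_buffer,
// substitute_buffer and get_buffer. It stores raw pointers and
// never frees them, matching the "not managed" rule.
struct BufferRegistry {
    std::vector<double*> buff;

    int register_buffer(double* bufp) {
        buff.push_back(bufp);  // bufp may still be nullptr at this point
        return static_cast<int>(buff.size()) - 1;
    }
    // Replaces the stored pointer; returning the old one is an assumption.
    double* substitute_buffer(int bufnum, double* ptr) {
        double*& slot = buff.at(static_cast<std::size_t>(bufnum));
        double* old = slot;
        slot = ptr;
        return old;
    }
    double* get_buffer(int bufnum) const {
        return buff.at(static_cast<std::size_t>(bufnum));
    }
};

// Registers a buffer before its memory exists, then plugs the memory in.
inline bool deferred_allocation_example() {
    BufferRegistry reg;
    int id = reg.register_buffer(nullptr);               // index is known early
    std::unique_ptr<double[]> storage(new double[8]());  // caller owns the memory
    double* old = reg.substitute_buffer(id, storage.get());
    return old == nullptr && reg.get_buffer(id) == storage.get();
}  // storage is released here by its owner, not by the registry
```

The buffer index can be used in requests as soon as it is returned, while ownership of the memory stays with the caller throughout.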

◆ transfer_requests()

| int transfer_requests (std::vector< typename comm_t::packer_t > &secondary_dests) | protected |

Processes the requested interpolations (they are recorded in rankvec).

Updates iplocal for local interpolation, and ip and commtbl for MPI interpolation. Secondary interpolation requests are re-recorded to rankvec.
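The split between local requests and per-processor request lists can be sketched as below. Everything here is illustrative: Req, owner_rank and partition_requests are hypothetical names, the one-dimensional unit-slab domain decomposition is an assumption standing in for test_rank, and the real class additionally negotiates the nonlocal lists with the other processors.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical request: a 1D position plus the destination (buffer, index).
struct Req { double x; int ibuf; std::size_t ind; };

// Hypothetical owner lookup: the domain [0, np) is split into unit slabs.
inline int owner_rank(double x, int np) {
    int r = static_cast<int>(x);
    if (r < 0) r = 0;
    if (r >= np) r = np - 1;
    return r;
}

struct Partition {
    std::vector<Req> local;                 // plays the role of iplocal
    std::vector<std::vector<Req>> rankvec;  // one request list per processor
};

// Sends each request either to the local list or to its owner's list.
inline Partition partition_requests(const std::vector<Req>& reqs,
                                    int myrank, int np) {
    Partition p;
    p.rankvec.resize(static_cast<std::size_t>(np));
    for (const Req& r : reqs) {
        int owner = owner_rank(r.x, np);
        if (owner == myrank) p.local.push_back(r);
        else p.rankvec[static_cast<std::size_t>(owner)].push_back(r);
    }
    return p;
}
```

The list for the calling rank always stays empty in this sketch, because requests owned by the caller go to the local list instead.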

Member Data Documentation

◆ myrank

| int myrank | protected |

container for collecting interpolated values

processor rank

The documentation for this class was generated from the following files:

- interp_transfer.h

- interp_transfer.hpp